"Smile, You're Being Processed"

"I'd like to know where the bin with what's left of our dignity is."

This is what a man said to me recently while waiting for his bin after his TSA check at the airport. Thank you, man, for putting words to what I had been thinking!!

I hadn't flown in a while.

"You don't fly much, do you?" a TSA person scoffed at me when I asked her what would happen if I refused to go into the radiation tube.

The last time I flew was 2019, before so many things in the world. Before so many things in my head.

This time, they chose ME, lucky ME, for the extra security check as I "left" Canada for the USA by plane. I was informed that, in addition to the usuals (which I also do not appreciate, thank you very much), I had to be patted down and have my bag searched. "I'm going to look in your wallet now, ma'am"... oh, my wallet too. My phone too: if they asked for it, I'd surely have to unlock it and show them what was in there.

"What happens if I refuse this?"

"Well, you can't fly."

"I can't fly? Why are you treating me like a criminal?" I asked, the line behind me looming large. Did these people find this gross too, or were they just so used to it? "Well, I don't want to do it. What if I refuse?"

"Well, you have to go all the way back to the initial check-in and ask them. Hang on, let me get someone else to talk with you," the lady said. She was nice here on the Vancouver side, at least.

Along comes this guy, younger than me, suit and tie, all that, to tell me the rules are the rules. To remind me that (apparently) the USA is the only country that requires this special extra check, that I was chosen randomly, and that if I were flying anywhere else in the world I would NOT be subject to this.

"I know, I know you didn't make the rules."

"I don't like it either," he said.

"Yeah, well. I just think more people should make a stink about it, so that's why I'm doing that. Go ahead."

I mean, if I didn't, someone else would just have to, and I didn't want to just pass it on. I just wanted to make a point, to stop the mindless following of insane orders for even a little while, to show people that, hey, this is messed UP!

As the lady was searching my bag, rifling through my unmentionables, she pulled out this pair of scissors. "Oh, I'm sorry about that, I forgot they were in there!" I said. "Oh, it's ok, these are fine," and... she put them back in!

What the f--- are they LOOKING for?! Apparently sharp scissors are...fine. Good thing I didn't have any liquids in there though. Or some way to get into the cockpit. Or whatever else.

The pat down was unpleasant, but not too pushy. I know this because on the way BACK I was NOT chosen for a special check, but I DID ask if I could avoid the radiation thing and be wand-ed instead (someone had told me that was a less radiation-full option), and that lady took FAR too much sadistic pleasure in demeaning me with her assault-like, poking, prodding thoroughness. I asked her, "Hey, can't you just wave me with the wand?" "Honey," her wrinkled, cigarette-hardened face glared, "we stopped doing that 10 years ago. You CHOSE this."

Did I want a private room, by the way, for the pat downs? No! No I did not. Thank you very much. Go have this done in private??! I needed witnesses. Many of them.

Remember back in 2003ish when this all started? I wasn't paying all that much attention but I do think we were told it would be a temporary measure. Just, you know, until some stuff was sorted out.

As I almost got into the USA from Canada (the USA portion of the airport, that is), after my extra special security check, there was one more step we all had to do, which involved some kind of eye scan while we showed our boarding pass and passport again. No one asked me, they just did it. Then, they spit me out into the airport mall, where I could suddenly be lulled from my rage via soothing shopping music, perfumes and liquor for sale, Starbucks.

I wasn't. I wasn't lulled from my rage. It didn't work, see here I am over a week later and, yeah.

On the way back, from the USA back onto an airplane, they were awful-er, even though I wasn't even targeted for special treatment. The only nice guy was the guy at the boarding pass place, prior to the assault. I watched him take boarding passes and check passports, then say, "Is it ok if I take your picture?", right next to the sign that offered an opt-out for the picture. Everyone just had their photo taken.

When it was my turn and he asked me, I said, "Well, it says here that I can opt out. So I'm opting out." He broke out of his robotic smile, paused, looked at me, and said, "Ok, well, you do know that you've already had your photo taken by probably 50 different cameras by this time," and I said, "Well, yeah, I'm sure I have. And did I consent to any of that? No, no I didn't! But I have the option here, so I'll say NO," and he was fine with it.

I used to think all this was just the way things had to be, just "for our safety." But there's got to be another way. We are herded around like cattle, treated like criminals. And some might say, well, who cares? If you don't have anything to hide, you should be fine with this. If you're not doing anything wrong, then what's the problem with being... spied on constantly? Wasn't there a movie a while back about East German surveillance in the 80s? The Lives of Others? And weren't we all supposed to be just ohhhh so glad the "good guys won" and those days were over, and just ohhhh so glad that the good old USofA and other "freedom"-loving "democracies" didn't do bad, horrible, unethical stuff like that? (Ha!) And so what about the radiation booth, some might say. Like the lady who scoffed at my radiation concerns said, "Well, you DO know that your phone emits far more radiation than this does." Take THAT, said her eyes. "Ummm, and that's supposed to make me feel better?" I wanted to scream it back to everyone still waiting in line, checking their phones. But I didn't.

Look it up: radiation in smartphones. Apparently they're all ohhhh pretty sure it's probably ok for our health, and no significant health concerns have been detected. Remember when doctors used to promote smoking? Is the sun radiation? Ok, sure, yeah, it is, but humans have lived with the sun for, well, do I really need to say it? Am I a "scientist"? An "expert"? Do I have any grounds whatsoever to speak on such a subject? Can we actually safeguard our whole lives and know anything for certain? No, no we can't. But I think we're all just being experimented on, whilst being treated like animals to be herded around. And I used to be a vegetarian who protested meat not because it was meat but because of the way factory farming treated animals. (I do eat it now. We are inconsistent (hypocritical), confused, and torn creatures, and I am no different. I do still hold disdain for factory farming and try (not hard enough) to avoid supporting it.)

Anyway. TSA. Airport security.

To some out there I might just sound like some kind of privileged person, a complainy-pants "freedom" want-er. Fine. I'm not special; they're treating us all this way, and we all have voices, we all could say something. Maybe I could've been treated worse if I were a different kind of person and complained? Maybe. Maybe I could be detained just like anyone else could be, 'cause freedom doesn't apply at TSA. Security overrides. Move it along. Just going to push on your groin, shove on it. Feel you up in front of all these people. You don't like the radiation tube? You have... concerns? Just going to search your wallet. Just going to empty your bag. Just going to take you to this room. You want to visit your family, don't you?

20+ years later, and now see below:

I've copied the following article from the link here, if you'd rather go directly to it. It's from a newsletter I get called Reclaim the Net, and the article is written by Christina Maas. I've highlighted some portions for effect, or in case you don't want to read the whole thing.

From Boarding Pass to Bio-ID: Airports Become Frontlines in the Surveillance State

It

starts with a smile. Not the fake kind you give at family gatherings,

but the dead-eyed, forced expression you contort into at an airport

scanner. Congratulations:

your face is now your boarding pass, passport, ID, and, if things keep

going this way, probably your tax return too. The biometric revolution

has arrived, and it’s dressed in the sleek, sterile uniform of

“efficiency.” Gone

are the days when you clutched a passport like a nervous toddler grips a

teddy bear. That humble little booklet, once the final shred of analog

dignity in a digital world, is being replaced by something far more

convenient and, coincidentally, far more dystopian. It’s called the Digital Travel Credential, and it wants your face. Not metaphorically. Literally. For over a century, international travel was simple. Present ID. Get stamp. Move

along. But apparently, pulling out a booklet became too laborious for

the high-speed, app-saturated modern traveler. So, governments, never

one to miss an opportunity to embed another layer of surveillance under

the pretense of modernization, decided to cut out the middleman and scan

your biometric soul instead. The

pitch is seductive. Faster lines. Fewer documents. No fumbling at

kiosks like a confused raccoon. Singapore, ever the overachieving

student of the techno-authoritarian classroom, has already flung the

doors wide open. Over 1.5 million people have glided through Changi

Airport without flashing a single document, their identities confirmed

by algorithms trained to spot tired humans in neck pillows. Not

to be left behind, a coalition of governments including Finland,

Canada, the UAE, and the US are playing their own game of biometric

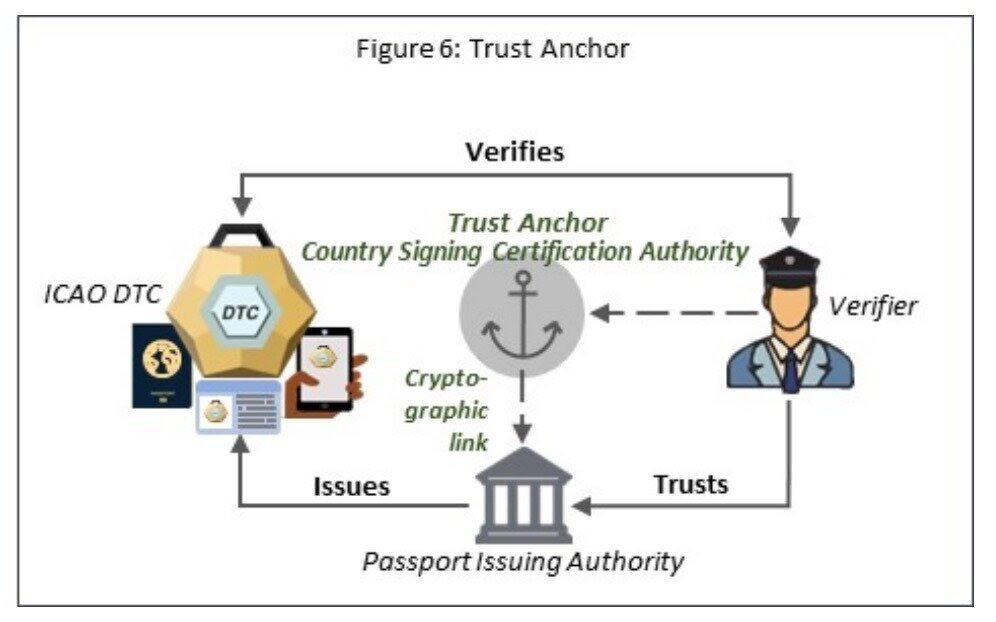

leapfrog. Trials of the Digital Travel Credential, blessed by the United

Nations’ International Civil Aviation Organization (ICAO), are

happening now. Think of it as a global beta test for turning airports

into facial recognition carnivals. |

|

|

ICAO, ever the cheerleader for dystopian chic, promises the DTC will create “seamless travel experiences.” They boast, “Authorities can verify a digital representation of the passport data before the traveler’s arrival and confirm the data’s integrity and authenticity.” In Finland, the whole check-in process reportedly takes eight seconds.

It sounds almost magical. But like most magic tricks, the payoff comes at a price. This one just happens to be your face: permanently stored, compared, tracked, and (hopefully) not sold to the highest bidder.

And if that makes you feel a little uneasy, don’t worry. You’re not alone.

Privacy advocates, those annoying people who keep insisting on “rights” and “transparency,” are sounding alarms. Once your biometric data is scooped up at the airport, it doesn’t just vanish into a puff of digital smoke. It lives on, tucked into databases that are as secure as a screen door in a hurricane. Different countries have different rules about what can be done with your data, which is a polite way of saying that no one knows where your face will end up.

Smile, You’re Being Processed

The irony is that while the ICAO and its member nations dress this up as a leap into the future, it’s really a backdoor to the past: a time when governments could track your every move, only now they can do it in high-definition and real-time.

All of this is happening with the usual cocktail of corporate jargon and governmental spin. The idea isn’t just to make travel easier, it’s to make surveillance look like customer service. A frictionless experience. Just smile for the scanner, collect your baggage, and forget you ever had privacy.

No one voted for this. That’s the fun part. Nobody held a referendum asking, “Would you like to exchange your fingerprint and face for the privilege of slightly faster boarding?” It just happened, rolled out through pilot programs, tech partnerships, and quiet bilateral agreements that sound like airport lounge cocktail napkin deals. By the time the average traveler noticed, their face was already in a database marked “trusted traveler.”

The beauty of biometric surveillance, from a bureaucrat’s perspective, is how easily it sidesteps democratic input. There’s no need to pass a controversial law or hold a heated debate on civil liberties when you can bury the whole thing under layers of tech jargon and point to improved efficiency metrics. “Look,” they say, “fewer lines at Heathrow.” Just don’t ask what else got streamlined.

And let’s not pretend this stays confined to airports. Once facial recognition becomes normalized at border control, it trickles outward like a leaky faucet: into train stations, stadiums, shopping centers, and eventually into your daily routine. That metro gate that reads your face “for your convenience” is also quietly logging your commute. Is that retail store using facial recognition to prevent theft? It’s building a profile on your purchasing habits. The line between security and surveillance dissolves like sugar in tea.

Meanwhile, oversight bodies are playing a game of regulatory whack-a-mole, always a few years behind the tech, and even further behind the corporations building it. Governments subcontract these systems to private companies, then act shocked when a breach occurs or when data ends up being monetized in creative, morally flexible ways.

The public? They’re told it’s all part of a smarter, safer society. Don’t worry, your data is encrypted. Stored securely. Accessed only by authorized personnel. These are the same promises that preceded every major data breach in the last decade. If you’re wondering whether the people who leaked your Social Security number can be trusted with your biometric blueprint, the answer rhymes with “no.”

The Future Is Contactless. So Is Consent

Consent, that pesky relic of liberal democracy, doesn’t stand a chance in the world of biometric ID. You don’t opt in. You comply. Or you don’t travel. There’s no real alternative being offered. No physical passport line is labeled “non-biometric idealists this way.” Just cameras. Gates. And that cheerful beep that confirms you’ve been scanned, validated, and filed into the machine.

This is the endgame of convenience culture: a society where efficiency crushes deliberation, where identity is reduced to code, and where the tools of control are dressed up like luxury features. Once the system exists, it expands. Because that’s what systems do. Ask anyone in a country where protesting too loudly gets you flagged, followed, or worse.

Whether it’s a corporate boardroom or a state security agency, the incentive to misuse this data is hardwired into the structure. Control is power. Knowing where people are, who they associate with, what they do: that’s leverage. That’s surveillance capitalism with a badge.

And here’s the bitter punchline: by the time enough people start to notice, it’ll be too late to opt out. The infrastructure will be everywhere. The databases will be built. The cameras are installed. Your biometric ID, already in use for everything from boarding flights to accessing public services, will become the de facto key to life itself. You’ll smile at the scanner because you have no choice. And the machine will smile back, not out of politeness, but because it just won.

Biometric Data in the US: A Legal Wild West

Land of the free? Sure: free for corporations and government contractors to scoop up your face, your fingerprints, maybe even your kid’s thumbprint from their public school cafeteria. The protections? A few scattered state laws, a lot of shrugs, and a regulatory framework that looks like it was assembled during a coffee break at a Silicon Valley hackathon.

Europe, despite being chained together by bureaucracies that move at the speed of molasses, somehow managed to produce the GDPR, a sweeping but admittedly flawed regulation that actually treats personal data like it matters. Meanwhile, across the Atlantic, the US response has been a resounding, “Good luck out there.”

Only three states (Illinois, Texas, and Washington) have dared to pass anything resembling a biometric privacy law. And of those, Illinois’ Biometric Information Privacy Act (BIPA) is the only one with real teeth. Under BIPA, companies have to ask before they harvest your face. What a concept. Better yet, if they screw it up, you can sue them. Naturally, tech companies hate it with the intensity of a thousand data breaches.

Everywhere else? It’s open season. Your gym can scan your face. Your workplace can track your keystrokes and heartbeat. Your grocery store can log your gait. And unless you live in one of those three states, there’s not much you can do if that data gets passed around like a bowl of chips at a Super Bowl party.

This vacuum of federal regulation isn’t a bug. It’s the business model.

Companies like Clearview AI thrive in it. These digital bounty hunters have scraped billions (yes, with a B) of images off the internet to create face recognition tools sold to law enforcement. No consent. No disclosure. Just “innovation.”

Airports, of course, have become the glittering showroom for this surveillance bonanza. “Public-private partnerships” is the polite term for the arrangement where government agencies team up with private tech firms to build facial recognition systems. What they don’t tell you is how your biometric data might be passed around between CBP, TSA, airlines, and who-knows-what third-party contractors running server maintenance in Arkansas.

No federal law means no mandated expiration date, no clear purpose limitation, and no real accountability. Your face might board you onto a plane in Atlanta and pop up in a law enforcement database two years later in Nevada. Don’t ask how it got there. You’ll be told it’s “classified.”

Function Creep: When Biometric Convenience Becomes a Surveillance Trap

Once your face is in the system, it tends to stay there. And not just for the reason you were told. That’s the essence of function creep: the bureaucratic sleight of hand where a tool introduced for convenience becomes a surveillance Swiss Army knife.

At first, facial recognition was sold to travelers as a nifty time-saver. Scan and go. But now those same systems are being fused with criminal databases, immigration enforcement records, and intelligence watchlists.

What started as a convenience at the gate becomes a digital dragnet with you at the center.

US Customs and Border Protection admits that images collected during travel can be shared with “other government entities.” That’s government-speak for: we don’t have to tell you who, why, or for how long.

Over in India, they’ve taken this tech carnival to new heights. The Digi Yatra program, pitched as a “voluntary” biometric boarding system, is being casually extended to hotels and tourist sites. Because when you’re checking into a heritage monument, what you really want is the same surveillance system used to process you at the airport.

This isn’t an exception. It’s the trajectory. Biometric infrastructure is designed to grow. One minute it’s used to speed up check-ins. The next it’s identifying who attended a protest, whether a tenant is behind on rent, or if a student skipped class.

The technology doesn’t creep. It expands. It metastasizes. And without hard legal boundaries, it will continue to spread into every corner of civic life. All of this, of course, under the saccharine branding of “streamlining” and “user experience.” The tech companies get to innovate. The government gets to surveil. You get to consent retroactively.

One Face, Infinite Uses

Function creep thrives in silence. Most people don’t realize that when they agree to a face scan at the airport, they might also be opting into an indefinite biometric record stored God knows where. They’re not told whether that data will be deleted, shared, or sold. Because nobody wants to admit that your face, once captured, becomes a permanent access point to your identity.

This is the great bait-and-switch of biometric convenience. It sells you freedom of movement while delivering the architecture of surveillance. It promises personalization while delivering profiling. It offers speed while extracting your most intimate identifiers for use in systems you can’t see and wouldn’t agree to if you could.

Without enforceable limits and real accountability, biometric tech won’t stop at the airport. It will follow you into schools, offices, stores, parks, and eventually, into the very fabric of your daily life. And if you ever dare to push back, the cameras will be watching.

You can’t opt out of a society where your body has become your ID. You can only hope someone in charge remembers that rights are supposed to mean something, even when you’re just trying to catch a flight.

Biometric Glitches: When the Tech Gets Your Face, and Your Identity, Wrong

Facial recognition is pitched as a marvel of modern efficiency: no ID, no problem, just scan and go. But behind the curtain of high-tech wizardry is a system that still can’t reliably tell one human from another. And when it gets it wrong, the consequences don’t look like a harmless software hiccup. They look like handcuffs.

These systems are built on the assumption that a few billion faces are all just unique barcodes waiting to be logged. In reality, they’re clunky, error-prone, and wildly overconfident in their own precision. It’s one thing when your phone doesn’t recognize you before coffee. It’s another when an airport scanner decides you don’t exist, or worse, that you’re someone else entirely. Case in point: a man in Detroit gets arrested after a facial recognition system falsely tags him as the suspect in a surveillance video. The machine makes the call, and suddenly you’re explaining your whereabouts to a very humorless police officer. Thirty hours in custody later, the system admits its mistake, but it’s not the machine spending the night in a holding cell.

These are symptoms of a broader problem: systems designed for mass identification that can’t consistently identify individuals. At the border, this translates to delays, secondary screenings, and missed flights. In other parts of life, it can mean being flagged, rejected, or denied services by a machine that thinks you’re someone you’re not, and no, there’s no “appeal” button.

The underlying issue is painfully simple. These tools are trained on massive datasets, often scraped together with whatever was cheap and easy to access. Nobody’s auditing the process. Nobody’s asking what happens when the system is wrong, because, apparently, algorithms can’t be held accountable.

Tech firms roll out these systems like they’re launching a new flavor of soda: high on hype, low on responsibility. They’ll assure you the next update will fix it. They’ll say accuracy is improving. What they won’t say is what happens when the wrong person is flagged and a human authority rubber-stamps the result because “the computer said so.”

It’s a governance failure as much as it is a technical one. Machines are making high-stakes decisions about who gets on planes, who gets pulled aside, and who gets arrested. And they’re doing it without any transparency, without recourse, and often, without a second glance from the humans supposedly overseeing them.

It’s institutional laziness wrapped in digital mystique. And the more we rely on these systems, the more we normalize a world where it’s perfectly acceptable for software to get your identity wrong, and for you to deal with the fallout.

The Chilling Effect of Being Watched: How Biometric Surveillance Warps Public Life

Surveillance doesn’t need to be loud to be effective. It doesn’t need to knock on your door or show up in your inbox. All it has to do is exist, quietly, constantly, just out of view. That’s the new architecture of public life. A camera in every station. A scanner at every gate. A watchful eye where you least expect it, nudging you into behaving just a little more... predictably.

You feel it, even if you don’t see it. That nagging sense that you’re being watched makes you walk straighter. You second-guess that joke you were about to tell. Maybe you hold off on joining that protest or skip a meeting just in case someone’s keeping score. That’s the chilling effect in action: not fear of doing something wrong, but fear of being misunderstood by a system that doesn’t forgive or forget.

Public spaces are being retrofitted with surveillance systems that not only record but interpret, deciding who belongs, who moves “suspiciously,” and who might be worth a closer look. The effect is subtle but corrosive. People withdraw. They avoid risk. They conform, not out of guilt, but out of caution.

A town square used to be a place for expression, argument, and spontaneity. Now it’s a monitored zone where behavior is quietly curated by software. And when every camera is paired with a system that logs and tracks you in real-time, public life starts to feel less like a right and more like a performance.

The real damage isn’t just to privacy, it’s to participation. Surveillance, especially when wrapped in biometric systems, creates a climate where people stop acting freely. They start playing it safe. They self-edit. And eventually, the vibrant noise of a free society fades into a controlled murmur.

This is the part that rarely makes it into the press releases from the companies selling “smart security” solutions. They’ll talk about safety. They’ll throw out words like “efficiency” and “innovation.” They won’t mention what happens when everyone in public starts acting like they’re walking through an airport terminal, forever.

Biometric surveillance doesn’t only change how we’re seen. It changes how we live. And if that’s the trade-off, then the question isn’t whether we need better technology. It’s whether we still remember what public freedom used to feel like before every street corner started watching.
